Ok. So I did the Game Jam and the results are … well, not that great, but I believe the ratings are in line with what I delivered. Now that the Jam is over, I’d like to take some time to reflect on the choices I made during it.

The Experience

This was a great learning experience, and the feedback I received is a good starting point for improving the project going forward. Most of it covered things I wanted to do but ran out of time for.

Just publishing something to Itch and participating in the jam is a morale boost, and it feels like a huge blocker has been removed.

It was also impressive to see what other people were able to do in the same time (and in some cases much less time).

The Engine

I decided to use Bevy, a game engine written in Rust, for this entry. This was mainly because I was learning Rust for another opportunity, and Bevy seems to be the strongest engine choice for that language.

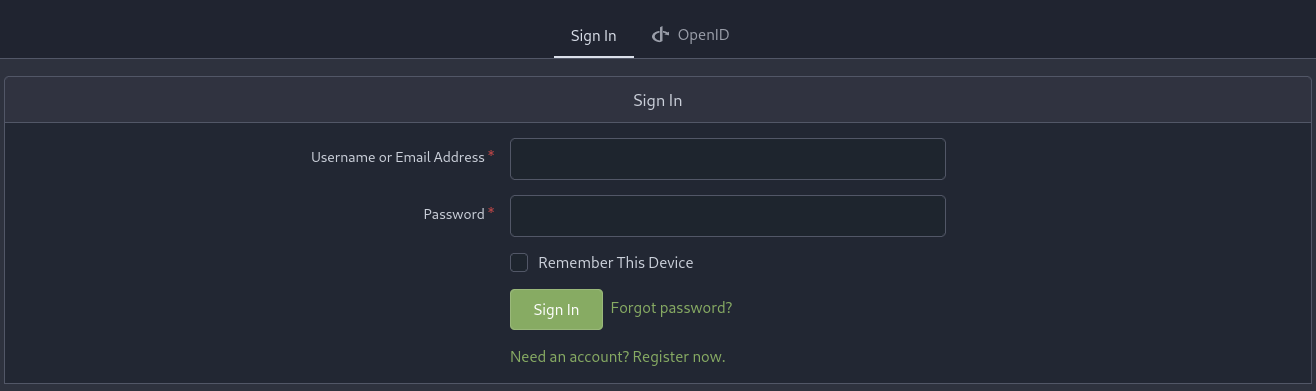

I could have made more progress and delivered a better game with Unity, given my prior experience with it and its much better documentation and ecosystem. But Unity is a heavy choice: you have to create an account, download Unity Hub, install a decent IDE, and write in C#, none of which I wanted to do for this Game Jam.

Godot was also an option, and one I seriously considered, but I would have had to learn its own scripting language (GDScript). Rust bindings are available, but I’m not sure learning Godot through Rust is a good idea. Godot is definitely an engine I want to learn and use, and it’s likely the one I would have picked if I were making a real game and could set aside the time to learn it properly.

The choice to go with Bevy definitely had some negative consequences for me, and they were negative mainly because of the time constraints of the Game Jam.

First of all, Bevy is still very much an in-development engine. There are gaps in the documentation that you are expected to fill by reading the examples. This works well if there is an example for your specific problem, but if there isn’t, there may be no documentation to fall back on. The Unofficial Bevy Cheat Book is an excellent resource that helps fill in those gaps.

Bevy itself is implemented as a collection of plug-ins. Almost every part of the engine can be enabled, disabled, swapped out, or customized as needed. This is extremely powerful, but it can make it difficult to find where a change needs to be made.

To my knowledge, Bevy does not include a 3D collision or physics library. The third-party library Rapier fills this gap, but I did not have time to learn it for this Game Jam. The current build simply treats all entities as points on a 2D plane.
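For anyone curious what “treating entities as points” means in practice, here is a minimal, engine-agnostic sketch in plain Rust. It is not the code from my build: the `GroundPos` type, the `contact_radius` parameter, and the helper functions are hypothetical names for illustration. Because the character never leaves the ground, only X and Z are compared, and two entities count as touching when their points are within a chosen radius of each other.

```rust
/// A position on the ground plane. Y is ignored because the character
/// cannot jump, so only X and Z matter. (Hypothetical type.)
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct GroundPos {
    pub x: f32,
    pub z: f32,
}

impl GroundPos {
    pub fn new(x: f32, z: f32) -> Self {
        Self { x, z }
    }

    /// Squared distance on the X/Z plane; comparing squared values
    /// avoids a square root on every check.
    pub fn distance_squared(self, other: GroundPos) -> f32 {
        let dx = self.x - other.x;
        let dz = self.z - other.z;
        dx * dx + dz * dz
    }
}

/// Assumed contact rule: two points touch when they are within
/// `contact_radius` of each other.
pub fn in_contact(a: GroundPos, b: GroundPos, contact_radius: f32) -> bool {
    a.distance_squared(b) <= contact_radius * contact_radius
}

/// Returns the indices of every enemy currently touching the player.
pub fn touching_enemies(player: GroundPos, enemies: &[GroundPos], contact_radius: f32) -> Vec<usize> {
    enemies
        .iter()
        .enumerate()
        .filter(|&(_, e)| in_contact(player, *e, contact_radius))
        .map(|(i, _)| i)
        .collect()
}
```

This naive check compares the player against every enemy each frame, which is fine for a jam-sized enemy count; a physics library like Rapier is what you would reach for once shapes, 3D, or large numbers of entities matter.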

The Concept

I wanted to build a game around a dashing mechanic. The character would be free to walk along the X and Z axes but would not be able to move along the Y axis (no jumping). The character’s main attack would be a forward dash that knocks enemies back. This attack could be charged to do more damage, and stronger enemies would require a charged attack to push them back.

The enemies would damage the player character whenever they or their projectiles touched it, and the player would keep taking damage for as long as they stayed in contact with an enemy. The player’s knockback attack would both move the enemy away from the player (breaking contact) and damage the enemy.
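The charged dash and knockback rules above could be sketched like this in plain Rust. All of the numbers, the `Enemy` fields (including `knockback_resistance`), and the function names are hypothetical; I never settled on tuning values, and the choice that a weak hit still damages a strong enemy without moving it is an assumption made here.

```rust
// Hypothetical tuning values; none of these come from the jam build.
const BASE_DAMAGE: f32 = 10.0;
const MAX_CHARGE_SECS: f32 = 1.5;
const MAX_CHARGE_MULTIPLIER: f32 = 3.0;
const BASE_KNOCKBACK: f32 = 2.0;

#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Enemy {
    pub x: f32,
    pub z: f32,
    pub health: f32,
    /// Minimum charge multiplier needed to push this enemy back at all.
    pub knockback_resistance: f32,
}

/// Maps how long the attack was held to a multiplier in [1, MAX_CHARGE_MULTIPLIER].
pub fn charge_multiplier(held_secs: f32) -> f32 {
    let t = (held_secs / MAX_CHARGE_SECS).clamp(0.0, 1.0);
    1.0 + t * (MAX_CHARGE_MULTIPLIER - 1.0)
}

/// Applies a dash hit from a player standing at (px, pz).
/// Damage always lands (assumption); the enemy is only pushed away
/// if the charge multiplier meets its knockback resistance.
pub fn apply_dash_hit(enemy: &mut Enemy, px: f32, pz: f32, held_secs: f32) {
    let mult = charge_multiplier(held_secs);
    enemy.health -= BASE_DAMAGE * mult;

    if mult < enemy.knockback_resistance {
        return; // too weak to move a strong enemy
    }

    // Push the enemy directly away from the player on the ground plane,
    // which is what breaks contact.
    let (dx, dz) = (enemy.x - px, enemy.z - pz);
    let len = (dx * dx + dz * dz).sqrt();
    if len > f32::EPSILON {
        let push = BASE_KNOCKBACK * mult;
        enemy.x += dx / len * push;
        enemy.z += dz / len * push;
    }
}
```

Keeping the rule as a plain function, separate from any Bevy system, makes it easy to tune and test without running the game.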

Random power-ups would appear on screen to provide shields, restore energy, and so on.

Energy would be the game’s main resource. It would drain constantly during a session but could be restored by collecting power-ups. Once all of the energy was drained, the game would be over. The basic premise is that you are in a hostile environment and need energy to keep functioning (repairing shields or armor, maintaining life support, etc.).
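The energy loop can be sketched as a small struct. Again, this is a hypothetical, engine-agnostic model rather than code from the jam build: the `Energy` type, its fields, and passing the frame’s delta time into `tick` are all assumptions. In Bevy this kind of state would likely live in a resource updated by a system each frame, but that wiring is left out here.

```rust
/// Hypothetical energy model: drains at a constant rate, is refilled
/// by power-ups, and the run ends when it reaches zero.
#[derive(Clone, Debug, PartialEq)]
pub struct Energy {
    pub current: f32,
    pub max: f32,
    /// Units drained per second (assumed to be supplied by the caller).
    pub drain_per_sec: f32,
}

impl Energy {
    /// Starts a session with a full tank.
    pub fn new(max: f32, drain_per_sec: f32) -> Self {
        Self { current: max, max, drain_per_sec }
    }

    /// Applies the constant drain for `dt` seconds, never going below zero.
    /// Returns true once energy has run out (game over).
    pub fn tick(&mut self, dt: f32) -> bool {
        self.current = (self.current - self.drain_per_sec * dt).max(0.0);
        self.is_depleted()
    }

    /// A collected power-up restores energy, capped at the maximum.
    pub fn restore(&mut self, amount: f32) {
        self.current = (self.current + amount).min(self.max);
    }

    pub fn is_depleted(&self) -> bool {
        self.current <= 0.0
    }
}
```

Capping restores at `max` keeps power-ups from being hoarded, so the player has to keep moving to find the next one, which fits the survival loop described here.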

In the main loop, the player would try to stay alive for as long as possible by avoiding enemies or knocking them back.

Avoidance would have been the main strategy because of the contact damage. Players would have to decide whether an attack was worth it while also managing how many enemies they allowed to accumulate. Dashing into a large group would be risky, since each enemy deals contact damage. To get the best survival time, the player would have to make effective use of power-ups and dashing.

The Compromises

This wasn’t a very complicated concept, and I thought I could finish it in the allotted time. The main risk in this project was using Rust, my newest language, and Bevy, an engine I had no serious experience with, instead of Unity or even Godot. However, life found a way to interfere with my plans, and unfortunately this forced me to drastically reduce the scope of what I was working on.

It may surprise you, but “Pumpkins are the only hope” was not the first title for this project. Late in the jam I ran into a problem with the original assets I was going to use. I needed a new, coherent set of assets, so I picked a few from Kenney Game Assets while muttering, “These pumpkins are the only hope of finishing.”

The Results

What I ended up with is what I would call a prototype, or a proof of concept showing that I can use Bevy to create a game. It’s going to take more work to learn the engine and its ecosystem of plug-ins, but it is possible. It wasn’t a full game, nor was it what I envisioned when I started, but I’m glad I was able to submit something for this Game Jam.

What’s Next

- Take time to build a prototype that is more in line with the original vision.

- Provide an in-browser version. Bevy supports this, but I ran into an issue where sound wouldn’t play. Browsers block audio until the user interacts with the page, so the game needs to be launched from a user action, and I didn’t know how to do that at the time.

- Prototype the game in Godot.

- Look into AppImage or Flatpak. I didn’t want to distribute the game as an executable in a gzip archive, but I ran out of time.

Favorite Entries

Cosmic Courier Cameron

Spacemail Chimp+

I played both of these games for far longer than I probably should have, but it was time well spent.