I’ve been running a self-hosted instance of GitLab for a few months. In general I’m happy with GitLab, but it’s fairly resource-intensive for my usage, so I decided to try Gitea as a lightweight alternative.

This post covers running Gitea with Docker Compose on top of rootless Podman on a local machine, so HTTPS, security, and other production settings are out of scope.

Sources

I used the following sources to install the test instance:

- Running Docker Compose with Rootless Podman
- Installation with Docker (rootless)

Install and configure Podman and Docker Compose

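```bash
# Install Podman, the Docker CLI shim, and Docker Compose
sudo dnf install -y podman podman-docker docker-compose

# Enable and start the per-user Podman API socket, then confirm it is active
systemctl --user enable podman.socket
systemctl --user start podman.socket
systemctl --user status podman.socket

# Point Docker Compose at the rootless Podman socket (current shell and future logins)
export DOCKER_HOST=unix:///run/user/$UID/podman/podman.sock
echo 'export DOCKER_HOST=unix:///run/user/$UID/podman/podman.sock' >> $HOME/.bash_profile
```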
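The `echo ... >> $HOME/.bash_profile` line appends another copy of the export every time it runs. Below is a minimal sketch of an idempotent alternative, plus a quick check that `DOCKER_HOST` really points at a socket. Both functions (`persist_docker_host`, `check_docker_host`) are hypothetical helpers written for this post, and the sketch assumes Bash with `~/.bash_profile` as your login file.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the rootless Podman setup above (Bash assumed).

# Append the DOCKER_HOST export to the login profile only if it is not there yet.
persist_docker_host() {
  local profile="${1:-$HOME/.bash_profile}"
  # Single quotes keep $UID literal so it is expanded at login time.
  local line='export DOCKER_HOST=unix:///run/user/$UID/podman/podman.sock'
  touch "$profile"
  # -q: no output, -x: match the whole line, -F: fixed string (no regex)
  if ! grep -qxF "$line" "$profile"; then
    printf '%s\n' "$line" >> "$profile"
  fi
}

# Succeed only if DOCKER_HOST points at an existing Unix socket file.
check_docker_host() {
  local path="${DOCKER_HOST:-}"
  path="${path#unix://}"  # strip the unix:// scheme prefix
  if [ -S "$path" ]; then
    echo "OK: $path is a socket"
  else
    echo "No socket at '$path'; is podman.socket running?" >&2
    return 1
  fi
}
```

Source the snippet, run `persist_docker_host` once, and run `check_docker_host` after starting `podman.socket`. The check only confirms that the socket file exists, not that the API behind it answers.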

Configure Gitea docker-compose.yaml

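I’m going to deploy Gitea with MySQL as the backing database. All the volumes will be named volumes using the `local` driver, so Podman manages their storage and we don’t have to worry about host directory permissions. Make sure you change the example credentials for both MySQL and Gitea, and keep `GITEA__database__PASSWD` identical to `MYSQL_PASSWORD`.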

First, create a folder for your compose file, change into it, and create an empty docker-compose.yaml:

```bash
mkdir -p gitea
cd gitea
touch docker-compose.yaml
```

Copy the example below into your docker-compose.yaml file.

```yaml
# docker-compose.yaml
version: "2"

volumes:
  gitea-data:
    driver: local
  gitea-config:
    driver: local
  mysql-data:
    driver: local

services:
  server:
    image: docker.io/gitea/gitea:1.20.1-rootless
    environment:
      - GITEA__database__DB_TYPE=mysql
      - GITEA__database__HOST=db:3306
      - GITEA__database__NAME=gitea
      - GITEA__database__USER=gitea
      - GITEA__database__PASSWD=gitea
    restart: always
    volumes:
      - gitea-data:/var/lib/gitea
      - gitea-config:/etc/gitea
    ports:
      - "3000:3000"
      - "2222:2222"
    depends_on:
      - db

  db:
    image: docker.io/mysql:8
    restart: always
    environment:
      - MYSQL_ROOT_PASSWORD=gitea
      - MYSQL_USER=gitea
      - MYSQL_PASSWORD=gitea
      - MYSQL_DATABASE=gitea
    volumes:
      - mysql-data:/var/lib/mysql
```
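Rather than editing the example passwords by hand, you could generate random ones. This is a sketch that assumes GNU `sed` (the Fedora default, needed for `sed -i` as written) and the exact `KEY=gitea` lines from the example file; `set_random_passwords` is a hypothetical helper, not part of Gitea or Compose. It gives Gitea and MySQL the same database password, because `GITEA__database__PASSWD` has to match `MYSQL_PASSWORD`, and leaves the usernames and database name alone.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace the example "gitea" passwords in the compose
# file with random ones. Usernames and the database name are left unchanged.
set_random_passwords() {
  local file="${1:-docker-compose.yaml}"
  local db_pass root_pass
  # 24 random alphanumeric characters, so nothing is special to sed or YAML
  db_pass=$(head -c 64 /dev/urandom | base64 | tr -dc 'A-Za-z0-9' | head -c 24)
  root_pass=$(head -c 64 /dev/urandom | base64 | tr -dc 'A-Za-z0-9' | head -c 24)
  # Gitea's DB password and MySQL's user password must match, so both get $db_pass
  sed -i -E \
    -e "s/(GITEA__database__PASSWD=)gitea\$/\1${db_pass}/" \
    -e "s/(MYSQL_PASSWORD=)gitea\$/\1${db_pass}/" \
    -e "s/(MYSQL_ROOT_PASSWORD=)gitea\$/\1${root_pass}/" \
    "$file"
}
```

Run `set_random_passwords docker-compose.yaml` before the first `docker-compose up`. The MySQL image only applies these variables when it initializes an empty data volume, so changing them afterwards does not change existing passwords.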

After you have created the docker-compose.yaml file and modified it to your liking, simply run `docker-compose up` from the gitea directory to start the Gitea instance. After a few seconds the instance will be ready to configure. If you’ve kept the default ports, navigate to http://localhost:3000 to finalize the Gitea installation. You should see a screen similar to the image below.
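`docker-compose up` runs in the foreground. If you start the stack in the background with `docker-compose up -d` instead, a small polling loop can tell you when the web UI is answering. This sketch assumes `curl` is installed and that Gitea returns a successful HTTP response on its root URL once it is up; `wait_for_http` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it returns a successful HTTP response
# or the timeout (in seconds) expires. Requires curl.
wait_for_http() {
  local url="${1:-http://localhost:3000}"
  local timeout="${2:-60}"
  local deadline=$(( SECONDS + timeout ))
  # -f: treat HTTP errors as failures, -s: silent, -o: discard the response body
  until curl -fs -o /dev/null --max-time 5 "$url"; do
    if (( SECONDS >= deadline )); then
      echo "Timed out waiting for $url" >&2
      return 1
    fi
    sleep 2
  done
  echo "$url is up"
}
```

For example: `docker-compose up -d && wait_for_http http://localhost:3000 120`.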

You can accept the defaults or modify the configuration as necessary. Once you are satisfied with the settings, click either of the two Install Gitea buttons at the bottom of the page.

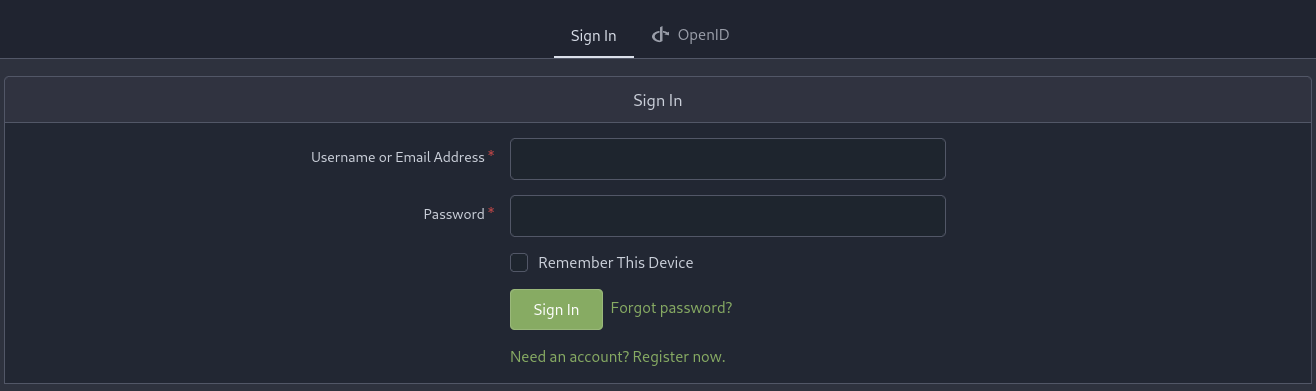

Once the server has finished the installation process, your browser will redirect to the login page.

Click the "Need an account? Register now." link and create the first user. This user will be the admin user.

Log in as this user to perform any additional server setup and to create other users.

Tear Down

Once you are finished with the test instance, you can shut it down by running:

```bash
docker-compose down
```

This stops and removes the containers and the default network but preserves the named volumes defined in docker-compose.yaml. If you want to delete the volumes as well, you can either remove them with the Podman CLI or run `docker-compose down --volumes`.
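To remove the volumes with the Podman CLI, you need their full names. Docker Compose prefixes each volume with the project name, which defaults to the name of the directory holding docker-compose.yaml (`gitea` in this post). The sketch below assumes that default; `remove_gitea_volumes` is a hypothetical helper, and it is worth running `podman volume ls` first to confirm the names on your machine.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete the three named volumes from docker-compose.yaml.
# Assumes Compose's default "<project>_<volume>" naming; project defaults to "gitea".
remove_gitea_volumes() {
  local project="${1:-gitea}"
  podman volume rm \
    "${project}_gitea-data" \
    "${project}_gitea-config" \
    "${project}_mysql-data"
}
```

Run it only after `docker-compose down`, since Podman refuses to remove a volume that a container is still using.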